This blog is part 2 of a series. For the introduction post, click HERE

It would be nice if you could load website tracking and customer profile data into your data warehouse just like you would do with invoices from SAP. But this data is big! Global companies attract millions of visitors on their websites. Each visitor generates dozens of hit records that end up in Google Analytics. And visitor profile data gets delivered by BlueConic every 5 minutes. This calls for new solutions and we will detail two of them below.

First there’s Google Analytics (GA). Among other thing, it stores data on the pages visited, website interactions and items added to the webshop carts. As a result of positive previous experiences, we decided to use the BigQuery Export functionality. This means that daily extracts of GA data are exported to Google’s Data Warehouse in a tabular format with some nested fields.

It is relatively easy to set up a connection between Google Analytics and BigQuery. The first step is to prepare a Google Cloud Platform (GCP) enabled account and create a project in it. Once that is done, you just need to use the created GCP Project ID and link it in Google Analytics admin settings. In our experience it is important to make the decision upfront in terms of how frequent you want the Google Analytics data exports to BigQuery. You will have the option to: (1) export in batch, (2) export continuously. More explanation in terms of timings and pricing to be found here. One observation is that GA data usually exports to BigQuery during the morning of the time zone setup per individual GA account. Although the timings of GA Batch Export option are quite consistent, there are also cases where the exports can be delayed for 1 or 2 hours.

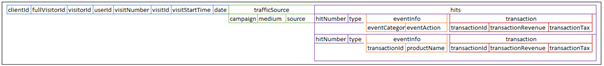

The next step in BigQuery would be designing the SQL queries to extract the Google Analytics data exported to Google’s Data Warehouse. One unique characteristic of the GA data format exported to BigQuery is that data is stored in a nested manner. Hence not all segments of data are inter-related and require proper design to flatten and normalize the data before it is ready to be ingested by the data warehouse. There are two types of SQL versions in BigQuery: (1) Legacy, (2) Standard. Typically, it is recommended to use the Standard version as it has more features such as subquery and external sources reading.

Google BigQuery has a nested data structure

Considering that we wanted to load this vast volume of data into Azure Synapse on a daily basis, we implemented Polybase loading. The data from GA gets queried and stored in Azure Blob Storage in CSV files on a daily basis. These files can be mounted in Synapse as External tables, and this setup is really easy! With the help of some tutorials we created a file format, external data source, credential and external table definition. Once the latter is done, you can simply query the files with SQL as if they are tables in the data warehouse. Ready to be processed further in Synapse, which the next blog will be about. One important thing to keep in mind is that Synapse can be quite picky, you need to fix text delimiters and carriage returns in your CSV files before exposing them.

If you have very specific reporting requirements, Google BigQuery might not have the information you need. Then the Google Analytics reporting API is the way to go. You can build your query with a limited number of measures and dimensions in python, run the code and store the data somewhere. During the course of the project we tried both v3 and v4 version of the API and discovered that v4 had many more features than v3, such as maximum rows return per queries (10000 (v3) vs 100000 (v4)).

When we were looking for a service to host the Python code on a daily basis, we initially shortlisted a few options such as Alteryx, Azure Batch, and Azure Function App. In the end, we chose to use Azure Function Apps for several reasons:

- Cost effective (Azure provides a free grant of 1 million executions per month)

- Integrated security (Azure Managed Service Identity)

- Easy to deploy (You do not need to setup the infrastructure and Python environment manually)

A Function App is a serverless application in which a wrapper runs your code. You can choose between several programming languages (Python, .NET, Node.js). You don’t have to worry too much about the infrastructure and have several advanced security features at your fingertips! The function app needs to be triggered in some way, and this is one of the many options you have in Azure Data Factory! In this case we not only use Data Factory to trigger the Function App, but also to orchestrate further loads. However, Google Analytics reporting API has its limitations.

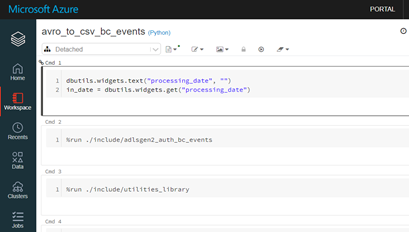

The other big data source we have had to tackle is BlueConic. It delivers profile data for website visitors, which is a lot as you might imagine. Using Azure Event Hubs, an integration was set up which delivers .AVRO files every 5 minutes into Azure BLOB Storage. This is a JSON-style file format we cannot tackle with our classic data ingestion tools. In close cooperation with some of our tech friends at Microsoft, we set up a notebook in Azure Data Bricks that processes the files and compiles them into CSV files in Azure BLOB Storage again. Once again, the orchestration is done by Data Factory. When the files are processed, we can again mount the CSV files and expose them to Synapse.

Databricks notebook workspace

Whoa, that’s a lot! Within a matter of weeks, we familiarized ourselves with Azure Data Factory, Databricks, Function apps and more! Now that we have some data available in our data warehouse, it is time to look at the next step, namely making it ready for reporting in Synapse. If you are looking for a demo of any of the aforementioned tools, or want to exchange your thoughts about them, please get in touch via the comments below.

authors: Paul Schoondermark & Kent Lai Zhi Yong